3D Photos

Inception

In 2018 I joined a team of super talented research scientists and engineers known for their world-class white papers at SIGGRAPH. My role was to turn these white papers into products.

One particular workstream focused on depth in media, with one project exploring traditional photogrammetry and world reconstruction using phones. We found out pretty quickly through user research that full photogrammetry took too much time to capture and process for most people to find it worthwhile, even if they valued the extra fidelity for reliving their memories.

However, we had a timely opportunity. iPhones had just been released with “portrait mode,” which included a new depth photo format. This is how 3D photos were born. They hit the sweet spot of low user effort and high reward: bringing people’s memories to life through a single-click capture.

Design

Turning depth data into a 3D photo wasn’t a one-and-done process. As a team of tech artists, engineers, researchers, and designers, we had to figure out how to create a performant 3D mesh, in-paint the background in the most visually appealing way, and, most difficult of all, define the interactions for consuming the 3D content on each of Meta’s platforms.

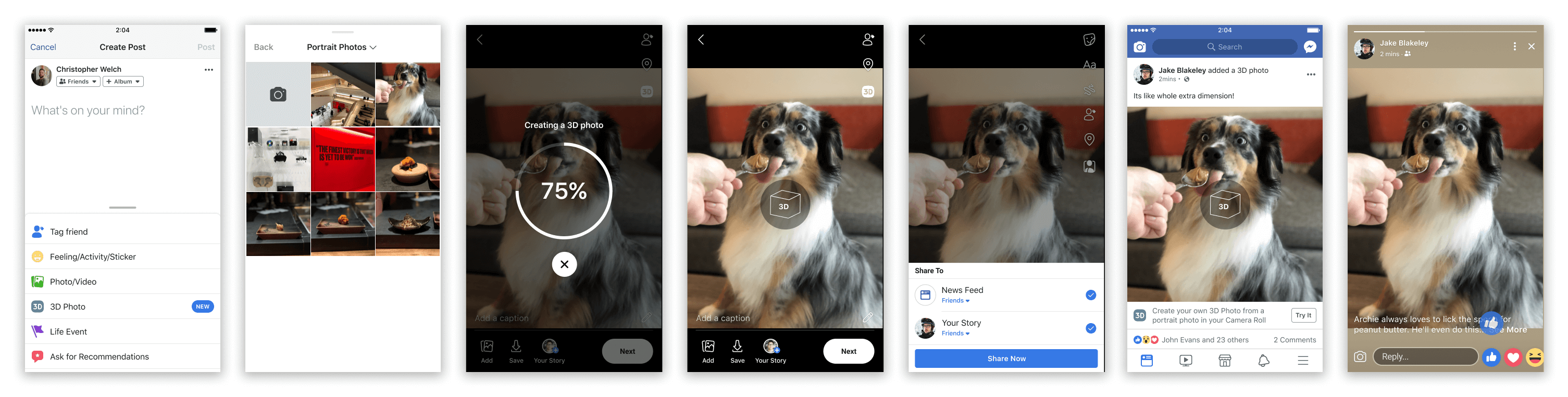

As a designer, I was particularly interested in creating something with high visual quality for the broadest range of content, educating users about how to interact with 3D photos, and helping users discover how to create them.

This was a particularly interesting project because we had to collaborate on solutions across Meta’s portfolio of apps. We had to define interactions for consuming 3D photos in a scrolling feed and in swipeable stories, as well as collaborate with Instagram (IG) and Facebook (FB) on the best ways to add creation entry points in their composers.

Growth

Our first win was getting a “make 3D” button inside Facebook’s highly coveted composer for iOS users. This allowed us to collect enough depth photos, and therefore training data, to eventually launch to all devices and hallucinate depth on any photo. However, as with many incubation teams, our work was handed off to growth teams once the product showed success. It later landed in VR in the browser home and as an ad format that adds motion to static photos with a single click.